GCP cloud resources

We advise running Deeploy on the following managed GCP services:

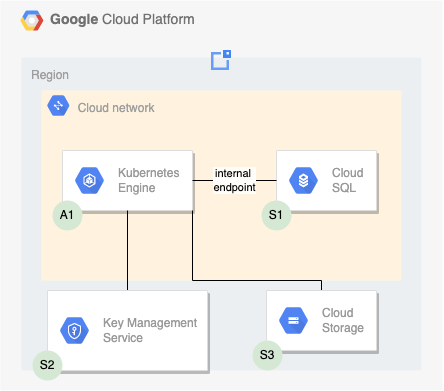

The following shows a high-level GCP reference architecture:

For production workloads, we advise managing the cloud resources in code. Google provides multiple providers for managing your infrastructure as code. See the Terraform documentation for Terraform/OpenTofu providers.

Google Kubernetes Engine

We suggest using a managed Kubernetes cluster (Stateless): Google Kubernetes Engine (GKE). To set up GKE for your Deeploy installation, follow the steps outlined in the GKE guide. Keep in mind the following specific considerations:

- For normal usage, Deeploy requires approximately 3 medium nodes; minimal requirements: 3 (v)CPU and 6 GB RAM.

- Kubernetes version: we advise using only standard supported versions to avoid extra costs.

- Enable cluster autoscaling. This prevents running into resource limits, but note that it also makes costs dynamic.
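The considerations above can be sketched with the gcloud CLI. This is a minimal sketch, not a definitive setup: the function name, the e2-standard-4 machine type, the node counts, and the regular release channel are assumptions chosen for illustration, so adjust them to your own sizing and upgrade policy.

```shell
# Sketch: create a regional GKE cluster with node autoscaling enabled.
# $1 = cluster name, $2 = region (both are placeholders you choose).
create_deeploy_cluster() {
  # In a regional cluster, --num-nodes and --min/--max-nodes apply per zone,
  # so one node per zone typically yields three nodes in total.
  # The machine type and release channel below are assumptions.
  gcloud container clusters create "$1" \
    --region="$2" \
    --machine-type=e2-standard-4 \
    --num-nodes=1 \
    --enable-autoscaling --min-nodes=1 --max-nodes=3 \
    --release-channel=regular
}
```

For example, `create_deeploy_cluster deeploy europe-west4` would create the cluster in the europe-west4 region.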

For GPU support, we recommend attaching an autoscaling node group to your cluster that uses your preferred GPU node type and can scale to 0. Alternatively, use Karpenter as a flexible scheduler. To make sure no other pods are scheduled on GPU nodes, you can add the following taint to your node pool:

    taints:
      - key: nvidia.com/gpu
        value: present
        effect: NoSchedule
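A GPU node pool carrying this taint could be created with the gcloud CLI roughly as follows. This is a sketch under assumptions: the function name, the pool name gpu-pool, the n1-standard-4 machine type, the nvidia-tesla-t4 accelerator, and the maximum of 2 nodes are illustrative choices, not Deeploy requirements.

```shell
# Sketch: add an autoscaling GPU node pool that can scale down to 0 nodes.
# $1 = existing cluster name, $2 = region of that cluster.
create_gpu_node_pool() {
  # Machine type, accelerator type, and node limits are assumptions;
  # pick the GPU node type you prefer.
  gcloud container node-pools create gpu-pool \
    --cluster="$1" \
    --region="$2" \
    --machine-type=n1-standard-4 \
    --accelerator=type=nvidia-tesla-t4,count=1 \
    --num-nodes=0 \
    --enable-autoscaling --min-nodes=0 --max-nodes=2 \
    --node-taints=nvidia.com/gpu=present:NoSchedule
}
```

Calling `create_gpu_node_pool deeploy europe-west4` would attach the pool to a cluster named deeploy. The `--node-taints` value uses the key=value:Effect form of the taint shown above.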

When you specify a GPU node for a Deeploy deployment, the nvidia.com/gpu label is applied automatically.

To make sure you can select the nodes that are scaled to 0 in Deeploy, add a list of node types to the values.yaml file when you install the Deeploy Helm chart.

Read more about our NVIDIA integration here

Google Cloud SQL for PostgreSQL

To set up Cloud SQL for PostgreSQL for your Deeploy installation, follow the steps outlined in this guide. Take into account the following considerations:

- Consider enabling automatic storage increase so that growing data volumes do not require manual intervention.

- Align the network configuration of the database with the GKE cluster using, for example, a private IP. This will allow for data transfers over the internal network.

- Implement best practices for backing up and restoring data at any point in time, as described in this article.

- Create a separate user with admin rights only on the required databases (deeploy and deeploy-kratos). Save the user credentials to use in the Helm values.yaml file that you use in the Deeploy Helm installation.
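As an illustration of the instance-level considerations above, the following gcloud sketch creates a PostgreSQL instance with automatic storage increase, a private IP, and point-in-time recovery. The function name, PostgreSQL version, tier, and backup window are assumptions, and the private IP part assumes that private services access is already configured for the VPC network.

```shell
# Sketch: create a Cloud SQL for PostgreSQL instance for Deeploy.
# $1 = instance name, $2 = region, $3 = VPC network shared with the GKE cluster.
create_deeploy_sql_instance() {
  # Version, tier, and backup start time are assumptions; size to your workload.
  # --no-assign-ip omits the public IP so traffic stays on the internal network.
  gcloud sql instances create "$1" \
    --database-version=POSTGRES_15 \
    --region="$2" \
    --tier=db-custom-2-7680 \
    --storage-auto-increase \
    --network="$3" --no-assign-ip \
    --backup-start-time=02:00 \
    --enable-point-in-time-recovery
}
```

For example: `create_deeploy_sql_instance deeploy-db europe-west4 default`.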

Database configuration

Make sure that the Postgres database server has the following two databases: deeploy and deeploy_kratos.

- A single user should have administrative rights on both databases.

- The databases should have at least one (public) database schema.
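The two databases can be created with the gcloud CLI, for instance as in the sketch below. The function name is hypothetical, and the sketch covers only database creation; granting the user rights on both databases is a separate step that is not shown here.

```shell
# Sketch: create the two databases Deeploy expects on an existing instance.
# $1 = Cloud SQL instance name.
create_deeploy_databases() {
  for db in deeploy deeploy_kratos; do
    # New PostgreSQL databases contain a public schema by default.
    gcloud sql databases create "$db" --instance="$1"
  done
}
```

For example: `create_deeploy_databases deeploy-db`.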

Google Cloud Storage

To set up a Cloud Storage bucket, use this guide. Additionally, keep in mind the following considerations:

- We assume you have created a service account. Create an IAM policy binding with the following role, scoped to the resource that should be administered by Deeploy:

roles/storage.admin

- Create a private access endpoint for your VPC. This will allow for data transfers over the internal GCP network.

- Check the values that need to be added to the Deeploy Helm chart here
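The bucket and its IAM binding can be sketched with the gcloud CLI as follows. The function name and the use of uniform bucket-level access are assumptions; the bucket name, location, and service account email are placeholders.

```shell
# Sketch: create a bucket and grant a service account roles/storage.admin on it.
# $1 = bucket name, $2 = location, $3 = service account email.
setup_deeploy_bucket() {
  # Uniform bucket-level access is an assumption, not a stated requirement.
  gcloud storage buckets create "gs://$1" \
    --location="$2" \
    --uniform-bucket-level-access
  # Bind the role on the bucket itself rather than on the whole project,
  # so the grant is scoped to the resource Deeploy administers.
  gcloud storage buckets add-iam-policy-binding "gs://$1" \
    --member="serviceAccount:$3" \
    --role=roles/storage.admin
}
```

For example: `setup_deeploy_bucket my-deeploy-bucket europe-west4 deeploy@my-project.iam.gserviceaccount.com`.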

Google Cloud Key Management Service

To set up a Key Management Service, use this guide. Keep in mind the following considerations:

- You need to create a key ring and a key within it. Check the values that need to be added to the Deeploy Helm chart here

- Create an IAM policy binding for the created key with the following role:

roles/cloudkms.cryptoOperator
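These steps can be sketched with the gcloud CLI as shown below. The function name is hypothetical, and the encryption key purpose is an assumption; all arguments are placeholders.

```shell
# Sketch: create a key ring, a key within it, and the IAM binding on that key.
# $1 = key ring name, $2 = key name, $3 = location, $4 = service account email.
setup_deeploy_kms() {
  gcloud kms keyrings create "$1" --location="$3"
  # A symmetric encryption key is assumed here.
  gcloud kms keys create "$2" \
    --keyring="$1" --location="$3" \
    --purpose=encryption
  # The binding is placed on the key, not on the key ring or project.
  gcloud kms keys add-iam-policy-binding "$2" \
    --keyring="$1" --location="$3" \
    --member="serviceAccount:$4" \
    --role=roles/cloudkms.cryptoOperator
}
```

For example: `setup_deeploy_kms deeploy-ring deeploy-key europe-west4 deeploy@my-project.iam.gserviceaccount.com`.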

Resource health

We suggest setting up Cloud Monitoring for monitoring and alerting related to resource health. If you suspect an issue with your GCP cloud resources, check the service health dashboard.

Estimation of costs

Next to the Deeploy license costs, GCP will bill you for the cloud resources. Check the expected costs with the GCP pricing calculator.

Next steps

- Get SMTP credentials

- Configure DNS and TLS

- Helm install Deeploy