Configure your Azure Cloud Resources

Installation is only required for teams on a Private Cloud plan.

Deeploy requires several Azure resources to operate successfully.

- Azure Marketplace

- Azure CLI

The complete Deeploy cloud setup is available in the Azure Marketplace. This is by far the easiest way to set up Deeploy.

To install the Azure cloud resources without the Marketplace, we recommend using the Azure CLI, although every step can also be performed in the Azure Portal. The code snippets use default values that you can adjust to your needs.

Create Azure Service Principal

To let Azure AD handle Identity and Access Management, Deeploy must be registered with an Azure AD tenant. Run the following command in a Bash shell with the Azure CLI (for example Azure Cloud Shell) to create a Service Principal with access to the Azure resources.

az ad sp create-for-rbac -n deeploy --skip-assignment

Once the Service Principal has been created, the command returns a set of attributes, including the tenant ID, client ID (app ID), and client secret (password). Record these values; they are required later when installing the Deeploy software with the Helm chart. If needed, multiple Service Principals can be used for the services.
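The ARM template used later in this guide also asks for the object ID of the Service Principal, which is not part of the attributes listed above. The following is a minimal sketch of how the client ID and object ID could be looked up afterwards. It assumes the display name `deeploy` from the command above; the function name `sp_lookup` is hypothetical, and the `id` query field assumes a recent Azure CLI release (older releases may call it `objectId`).

```shell
#!/usr/bin/env bash
# Sketch: look up the client (app) ID and object ID of an existing Service Principal.
# Assumption: the Service Principal was created with the display name "deeploy".

sp_lookup() {
  local name="${1:-deeploy}"
  local app_id object_id

  # Take the first match for the display name; empty output means nothing was found.
  app_id="$(az ad sp list --display-name "$name" --query "[0].appId" --output tsv)"
  if [ -z "$app_id" ]; then
    echo "No Service Principal named '$name' found" >&2
    return 1
  fi

  # Assumption: recent Azure CLI versions expose the object ID as "id".
  object_id="$(az ad sp show --id "$app_id" --query "id" --output tsv)"

  printf 'SP_CLIENT_ID=%s\nSP_OBJECT_ID=%s\n' "$app_id" "$object_id"
}
```

Calling `sp_lookup deeploy` prints two `NAME=value` lines that can be copied into the `servicePrincipalClientId` and `servicePrincipalObjectId` template parameters.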

Create Deeploy core components

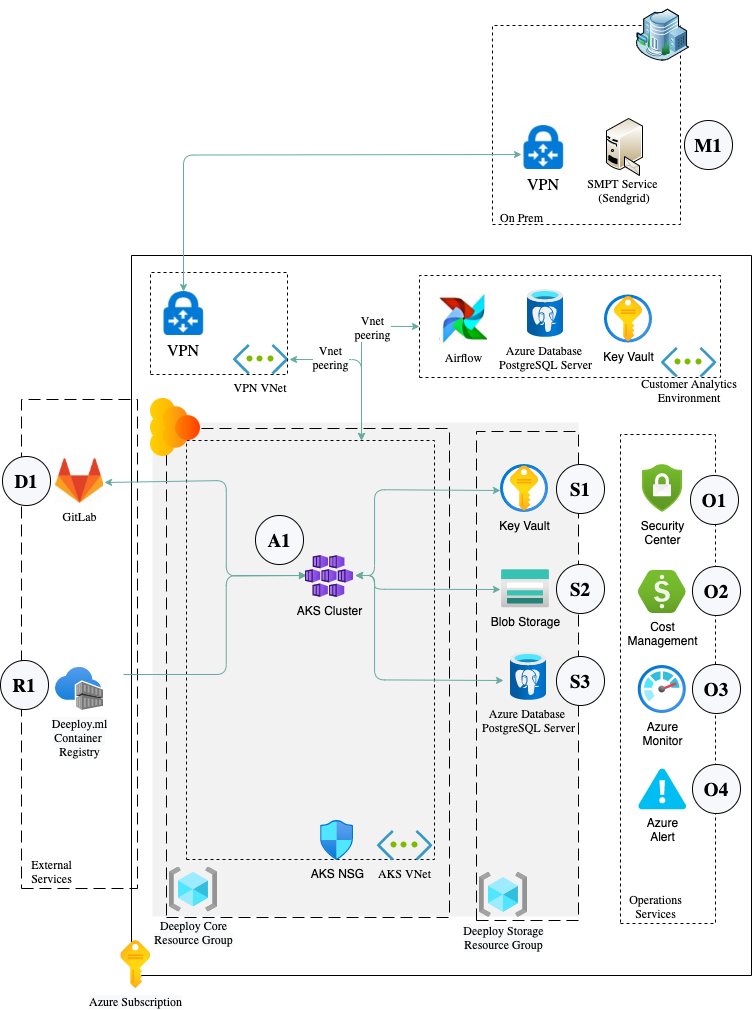

The image above gives an overview of a typical Azure client deployment, represented by the grey section. The annotations are explained below.

Deeploy Core components

- A1 The container platform at the core of the Deeploy.ml solution using Azure Kubernetes Service (AKS)

- S1 Storage of Secrets & Certificates using Key Vault

- S2 Persistent storage of model files using Blob Storage

- S3 Storage of configuration, parameter & relational data using Database for PostgreSQL

Supporting services

- D1 Links to Azure DevOps (or another code / model repository such as GitHub or GitLab) for reference links

- M1 SMTP service for outgoing emails, either public (such as SendGrid) or privately hosted

- R1 Container Registry for the latest version of the Deeploy application containers

Monitoring and operations

- O1 Security monitoring using Azure Security Center

- O2 Azure cost management & billing

- O3 Azure Monitor including Metrics

- O4 Azure Alerts

We have prepared an ARM template that can be used to spin up all the necessary components. The parameters in the template can be easily adjusted according to your specific requirements.

az deployment sub create \
  --name Deeploy \
  --location <your-azure-region> \
  --template-uri "https://raw.githubusercontent.com/deeploy-ml/iac-solution-templates/main/azure/deeploy.mainTemplate.json" \
  --parameters coreResourceGroupName=deeployCoreResourceGroup \
    storageResourceGroupName=deeployStorageResourceGroup \
    clusterName=deeploy \
    servicePrincipalClientId=<your-service-principal-client-id> \
    servicePrincipalObjectId=<your-service-principal-object-id> \
    servicePrincipalSecret=<your-service-principal-secret> \
    dnsPrefix=deeploy \
    osDiskSizeGB=0 \
    minAgentCount=1 \
    maxAgentCount=5 \
    desiredAgentCount=3 \
    agentVMSize=Standard_DS2_v2 \
    linuxAdminUsername=deeploy \
    sshRSAPublicKey=<your-public-key> \
    dbName=deeploy \
    dbAdministratorLogin=deeploy \
    dbAdministratorLoginPassword=<your-db-admin-password> \
    dbTypeName=B_Gen5_1 \
    dbTier=Basic \
    dbCapacity=1 \
    dbSizeMB=51200 \
    dbFamily=Gen5 \
    keyVaultName=deeploy \
    saName=deeploy \
    saType=Standard_LRS
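Before creating any resources, it can help to preview what the template would change. The sketch below wraps `az deployment sub what-if` around the same template URI. The function name `deeploy_what_if` and the region used in the usage note are illustrative assumptions, not part of the Deeploy tooling.

```shell
#!/usr/bin/env bash
# Sketch: preview the changes the Deeploy template would make, without deploying.
# Assumption: the function name is illustrative; pass the same key=value
# parameters you would pass to "az deployment sub create".

TEMPLATE_URI="https://raw.githubusercontent.com/deeploy-ml/iac-solution-templates/main/azure/deeploy.mainTemplate.json"

deeploy_what_if() {
  if [ "$#" -lt 2 ]; then
    echo "usage: deeploy_what_if <azure-region> key=value [key=value ...]" >&2
    return 2
  fi
  local location="$1"
  shift

  # All remaining arguments are passed through as template parameters.
  az deployment sub what-if \
    --location "$location" \
    --template-uri "$TEMPLATE_URI" \
    --parameters "$@"
}
```

For example, `deeploy_what_if westeurope clusterName=deeploy dnsPrefix=deeploy` followed by the remaining parameters lists the resources that would be created, without creating them.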

GPU support

For GPU support, we recommend attaching an autoscaling node pool to your AKS cluster that uses your preferred GPU node type and can scale down to 0. Alternatively, use Karpenter as a flexible scheduler. To make sure no other pods are scheduled on GPU nodes, add the following taint to your node pool:

taints:
  - key: nvidia.com/gpu
    value: present
    effect: NoSchedule

When you specify a GPU node for a Deeploy deployment, the nvidia.com/gpu label is applied automatically.

To make sure you can select the nodes that are scaled to 0 in Deeploy, add a list of node types to the values.yaml file when you install the Deeploy Helm chart.
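The GPU node pool recommended in this section could be created with the Azure CLI roughly as follows. This is a sketch under assumptions: the resource group and cluster names follow the template defaults used earlier, the AKS cluster is assumed to live in the core resource group, and the pool name `gpupool`, the VM size `Standard_NC6s_v3`, and the maximum node count are example values.

```shell
#!/usr/bin/env bash
# Sketch: add a tainted, autoscaling GPU node pool that can scale down to 0.
# Assumptions: resource group and cluster names follow the template defaults;
# the pool name, VM size, and maximum node count are example values.

add_gpu_pool() {
  local resource_group="${1:-deeployCoreResourceGroup}"
  local cluster="${2:-deeploy}"
  local vm_size="${3:-Standard_NC6s_v3}"
  local max_nodes="${4:-2}"

  # A User-mode pool may start at 0 nodes; the autoscaler adds nodes on demand.
  az aks nodepool add \
    --resource-group "$resource_group" \
    --cluster-name "$cluster" \
    --name gpupool \
    --mode User \
    --node-vm-size "$vm_size" \
    --enable-cluster-autoscaler \
    --min-count 0 \
    --max-count "$max_nodes" \
    --node-count 0 \
    --node-taints "nvidia.com/gpu=present:NoSchedule"
}
```

The `--node-taints` value uses the `key=value:effect` form of the taint shown above, so only pods that tolerate `nvidia.com/gpu` are scheduled on these nodes.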

Firewall configuration

This step needs to be completed for both the Azure Marketplace and the Azure CLI configuration.

To ensure that the cluster nodes can access the Azure PostgreSQL database, complete this final step. The required actions depend on the database tier:

- For the Basic tier, it is not possible to whitelist the Virtual Network (VNet) where the nodes are located. Instead, create a firewall rule on the DB server that whitelists the IP range covering all IPs of the AKS cluster nodes:

  - Obtain the IP range from the VNet of the AKS cluster nodes.

  - Add this IP range as a firewall rule on the DB server.

- For higher tiers, we recommend creating a VNet rule on the DB server that allows access from the subnet the AKS nodes are in:

  - Create a Microsoft.Sql Service Endpoint in the VNet of the AKS cluster nodes.

  - Add the Service Endpoint created above as a firewall rule on the DB server.
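Both firewall variants can be sketched with the Azure CLI. The sketch assumes an Azure Database for PostgreSQL single server managed through the `az postgres server` command group; the function names and rule names are hypothetical. `cidr_to_range` turns the address prefix of the VNet into the first and last IP address that a firewall rule expects.

```shell
#!/usr/bin/env bash
# Sketch of both firewall variants. Assumptions: function and rule names are
# illustrative; the database is managed via the "az postgres server" commands.

# Convert an IPv4 CIDR block (e.g. 10.224.0.0/12) into "first-ip last-ip".
cidr_to_range() {
  local ip="${1%/*}" prefix="${1#*/}"
  local a b c d n mask start end
  IFS=. read -r a b c d <<< "$ip"
  n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  start=$(( n & mask ))
  end=$(( start | (~mask & 0xFFFFFFFF) ))
  printf '%d.%d.%d.%d %d.%d.%d.%d\n' \
    $(( (start >> 24) & 255 )) $(( (start >> 16) & 255 )) \
    $(( (start >> 8) & 255 ))  $(( start & 255 )) \
    $(( (end >> 24) & 255 ))   $(( (end >> 16) & 255 )) \
    $(( (end >> 8) & 255 ))    $(( end & 255 ))
}

# Basic tier: whitelist the IP range of the AKS node VNet on the DB server.
allow_ip_range() {
  local db_rg="$1" server="$2" cidr="$3" start end
  read -r start end <<< "$(cidr_to_range "$cidr")"
  az postgres server firewall-rule create \
    --resource-group "$db_rg" --server-name "$server" \
    --name allow-aks-nodes \
    --start-ip-address "$start" --end-ip-address "$end"
}

# Higher tiers: enable the Microsoft.Sql service endpoint on the AKS node
# subnet, then allow that subnet on the DB server.
allow_subnet() {
  local db_rg="$1" server="$2" subnet_id="$3"
  az network vnet subnet update --ids "$subnet_id" \
    --service-endpoints Microsoft.Sql --output none
  az postgres server vnet-rule create \
    --resource-group "$db_rg" --server-name "$server" \
    --name allow-aks-subnet --subnet "$subnet_id"
}
```

The CIDR block for `allow_ip_range` can be read from the VNet in the node resource group, for instance with `az network vnet list --resource-group <node-resource-group> --query "[0].addressSpace.addressPrefixes[0]" --output tsv`, assuming the first VNet and first address prefix are the ones the AKS nodes use.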

- Create a